The ALPHA experiment at CERN has found that antimatter particles fall downward under gravity, just like matter particles. It had been an open question whether antimatter particles, the counterparts of matter particles, would fall up instead of down. This experiment answered the question.

Antimatter is the mysterious opposite form of matter; antiparticles have the opposite electric charge to normal particles. The antiparticle of the proton is the antiproton, the antiparticle of the electron is the positron, and so on. All fermions we know of have a corresponding antiparticle. We would expect equal amounts of matter and antimatter in the universe, but as far as we know the universe consists mainly of matter. This discrepancy is called the matter-antimatter asymmetry, and it’s one of the biggest unsolved problems in physics.

According to our current understanding, the Big Bang should have created equal amounts of matter and antimatter, but almost all of the antimatter seems to have disappeared. Scientists have long been looking for differences between matter and antimatter to figure out why matter dominates in the universe. When antimatter meets matter, the two annihilate. This makes antiparticles hard to study, since they disappear very quickly.

According to Einstein's general theory of relativity, all particles should interact with gravity in the same way. However, Einstein's theory was published before we even knew about antimatter, so it has been an open question whether antimatter interacts gravitationally in the same way as matter.

Scientists have wondered whether antiparticles could fall up instead of down. In other words, if you held a ball made of antiparticles on Earth and dropped it, it might fall upwards instead of downwards. This idea may seem quite absurd. There was no reason to think that antihydrogen atoms should fall up, but we also had no proof that they don’t. It was possible that antimatter experiences repulsive gravity. If this were the case, it would be a groundbreaking discovery with a lot of practical applications. So the idea had to be tested, which is what scientists at ALPHA have now done. The ALPHA-g experiment dropped some antihydrogen and saw what happened.

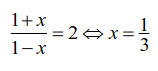

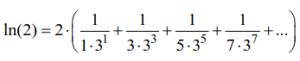

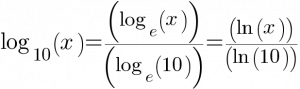

For a long time, the problem was producing and containing antimatter long enough to conduct an experiment like this. Now these scientists have been able to contain antihydrogen atoms and test the effect of gravity on them. First, antiprotons and positrons are produced and trapped together with the goal of making antihydrogen atoms. Antihydrogen, which consists of an antiproton and a positron, is the antimatter equivalent of hydrogen. The experiment traps these antihydrogen atoms, then opens the top and bottom barriers of the trap and records whether the atoms travel up or down due to gravity. If about 80% of the atoms travelled downward, that would match the behaviour predicted for normal hydrogen atoms. The results showed that antihydrogen does in fact behave the same way as hydrogen under gravity.

This means that antimatter is affected by the Earth's gravitational force in the same way as matter. So, if you drop a ball of antimatter, it does not fall up. The result also means that Einstein's theory on the behaviour of matter under gravity holds for a form of matter that was discovered long after his theory was published.

The ALPHA-g result brings us closer to understanding antimatter and, in turn, the origin and structure of our universe.

Jonny Montonen

Anderson, E.K., Baker, C.J., Bertsche, W. et al. (2023) Observation of the effect of gravity on the motion of antimatter. Nature 621, 716–722 https://doi.org/10.1038/s41586-023-06527-1