In this project, we propose to develop novel models that incorporate the interpretation uncertainty of natural language into modern neural language models. We expect these uncertainty-aware language models to provide a much better fit for human language and communication in general, with benefits that should become visible in practical tasks such as question answering and machine translation.

- 2022-2024, funded by the Academy of Finland

Research Team

- Jörg Tiedemann (PI and project manager)

- Hande Celikkanat

- Sami Virpioja

- Timothee Mickus

- Raúl Vázquez

- Elaine Zosa

- Teemu Vahtola

External collaborators:

- Markus Heinonen (Aalto University)

- Luigi Acerbi (University of Helsinki)

- Wilker Aziz (University of Amsterdam)

- Ivan Titov (University of Edinburgh / University of Amsterdam)

Project Setup

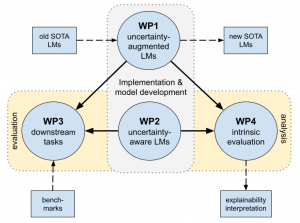

The project consists of 4 work packages and their connections are illustrated in the figure below:

WP1 focuses on augmenting existing state-of-the-art neural language models with uncertainty information. WP2 aims to develop natively uncertainty-aware models based on Bayesian extensions of deep neural network architectures. WP3 and WP4 are concerned with evaluation, assessing both the quality and the internal workings of the novel uncertainty-aware models we create throughout the project. In WP3, we will use established NLP benchmarks, emphasizing tasks that require advanced natural language understanding and involve strong ambiguities and fuzzy decision boundaries. WP4 addresses the explainability of neural language models; here, we aim to study the parameters and dynamics of uncertainty-aware language models.

News and activities

- The First Workshop on Uncertainty-Aware NLP will run at EACL 2024 in Malta in March 2024

- SHROOM at SemEval 2024 – a Shared task on Hallucinations and Related Observable Overgeneration Mistakes

- Presentation of our paper on Uncertainty-Aware Natural Language Inference with Stochastic Weight Averaging at the AI Day 2023 [code]

- Research visit by Raúl Vázquez from our team to the Probabilistic Language Learning Group in Amsterdam during October 2023

- Talk by Dennis Ulmer from the IT University of Copenhagen on “Uncertainty Quantification for Natural Language Processing: Current State & Open Questions” in our LT research seminars

- UnGroundNLP – 2022: Workshop on Uncertainty and Grounding in Language and Translation Modelling