IBM’s Deep Blue chess engine beat Garry Kasparov in 1997, becoming the first computer to beat a world champion. Deep Blue’s “intelligence” was of the kind computers excel at — calculation. It could search all possible sets of moves up to a certain depth and choose the best option.

With the advancements in artificial neural networks over the last decades, we have witnessed the birth of a new generation of chess engines that mimic the way humans learn chess: by trial and error and repeated practice.

This technique, known as reinforcement learning, was used by DeepMind 5 years ago to develop AlphaZero, a chess AI. Humans were no longer a challenge, as the advancements in raw calculation power have made smartphones more capable than the supercomputer used by Deep Blue. Instead, AlphaZero would compete against other algorithms. It beat the reigning champion at the time, Stockfish 8, and was praised for its groundbreaking strategies. The highest rated human player, Magnus Carlsen commented on AlphaZero in an interview, saying that its play could be “mistaken for creativity”. How might this “creativity” be useful elsewhere?

DeepMind’s researchers recently published a paper in the journal Nature, titled “Discovering faster matrix multiplication algorithms with reinforcement learning”, where they outlined a new application of AlphaZero for algorithmic discovery. The algorithm in question?

Matrix Multiplication

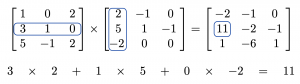

Below is an example of the traditional matrix multiplication process. To calculate the value of an element in the new matrix, you just need to calculate the sum of the element-wise products of the same row and column in the first and second matrices respectively.

It might seem impossible to do this any faster than what we just saw, after all we need to calculate the values individually. Mathematicians agreed, and for centuries, the consensus was that the method shown above is the best possible.

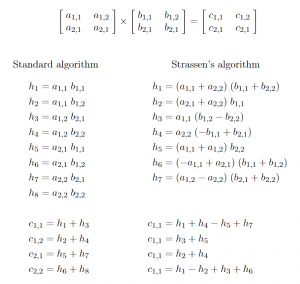

A 1969 landmark paper by Volker Strassen proved that this was not the case. How did he manage to do this? It is actually remarkably simple. As did he, we can examine a small matrix such as 2 x 2 to understand the method.

As we can see, Strassen’s algorithm uses 7 multiplications, 1 less than the traditional method. This is the minimum possible for a 2 x 2 matrix.

Now you might ask, is this reduction of 1 in multiplications worth all the extra additions that come with this method? Indeed, it is not, for matrices of size 2 x 2. But the best part about Strassen’s algorithm is that it works for any size matrix (even non-square ones, but that requires some extra work). In the example above, we treated the elements of A and B as real numbers, however, they can also be submatrices of A and B. Suppose the original matrix is size N x N, then each element would represent a matrix of size N/2 x N/2, one corner of the original. This division can be done recursively until the submatrices are small enough (depending on the implementation). Then we can switch to standard matrix multiplication and compute the final result.

Beyond the 2 x 2 case, Strassen’s algorithm is not the most optimal, and there are many faster known algorithms. The minimum possible number of multiplications is unknown for all sizes other than 2 x 2. In fact, one of the most important open problems in computer science is the general case of an efficient algorithm for computing the product of any two finite dimensional matrices. AlphaTensor cannot prove this, so mathematicians can rest easy knowing their jobs are safe. Nevertheless, the program is capable of finding ways to multiply small matrices more efficiently. It rediscovered algorithms like Strassen’s, and also found novel algorithms that surpassed the best human designed algorithms.

How?

In simple terms, the researchers turned matrix multiplication into a single player game, where AlphaTensor would try to solve a puzzle in as few legal moves as possible. The resulting sequence of moves represented a provably correct algorithm that could be used to multiply any two matrices of the inputted dimensions. The game is incredibly hard, since the number of possible algorithms for even a 3 x 3 matrix is greater than the number of atoms in the universe.

Applications

We have now talked at length about matrix multiplication, so one might consider what this is actually useful for. Matrix multiplication is fundamentally related to linear algebra, which has many applications. For example, a matrix multiplication can represent any geometric transformation e.g. rotation of any object, which makes them very useful for 3D graphics. Other applications include speech recognition, weather forecasting and data compression. Matrix multiplication is so widely used, that even minor improvements have major impacts. Organizations and businesses around the world spend billions of dollars on developing computing hardware for efficient matrix multiplication, so it can have real economic impact as well.

The future

The algorithms found by AlphaTensor are both useful and interesting, but even more so, the approach of using reinforcement learning for algorithmic discovery is very promising in terms of its possible future applications. It demonstrates the power of neural network based approaches for optimizing pretty much anything, whether it be matrix multiplication or chess, or any of the many more applications yet to be developed.

Sources:

Fawzi, A., Balog, M., Huang, A. et al. Discovering faster matrix multiplication algorithms with reinforcement learning. Nature 610, 47–53 (2022). https://doi.org/10.1038/s41586-022-05172-4

Fridman, L., Carlsen, M. Magnus Carlsen: Greatest Chess Player of All Time | Lex Fridman Podcast #315 [Video] (2022). YouTube. https://www.youtube.com/watch?v=0ZO28NtkwwQ&t=1634s

Kasparov, G. Chess, a drosophila of reasoning. Science, 362(6419), 1087–1087 (2018). https://doi.org/10.1126/science.aaw2221

Pandolfini, B. Kasparov vs. Deep Blue: The historic chess match between man and Machine. Fireside (1997).

Strassen, V. Gaussian elimination is not optimal. Numer. Math. 13, 354–356 (1969). https://doi.org/10.1007/BF02165411

Author:

Samuli Näppi

Samuli – it’s amazing that humans are able to learn and become expert at complex games, but it seems like computers are catching up to us!

-Edie