The purpose of this research was to develop a proof-of-concept of how consumer generated interpretations of everyday sustainability in social media could be utilized in business settings. Main tasks were data acquiring, processing, and analyzing as well as presenting the results to academia in Finland and abroad as well as to members of interest groups from business.

On the first stage of this project, I went through some previous research on consumer generated social media data use to learn how to acquire such data and to find out whether anyone has done this type of research before. Quite soon I realized that MORE project is unique since it focuses on networks of tags rather than popularity or relationship between actors on the platform. Practically speaking, I had to be careful when going through the posts on Instagram so that the ones with clear commercial interests behind them would not be accepted into the data. Here, the advice from our colleagues at the University of Helsinki concerning our problems with data acquisition was highly valuable as it became clear that most platforms are not very reliable when it comes to large and sensitive data files.

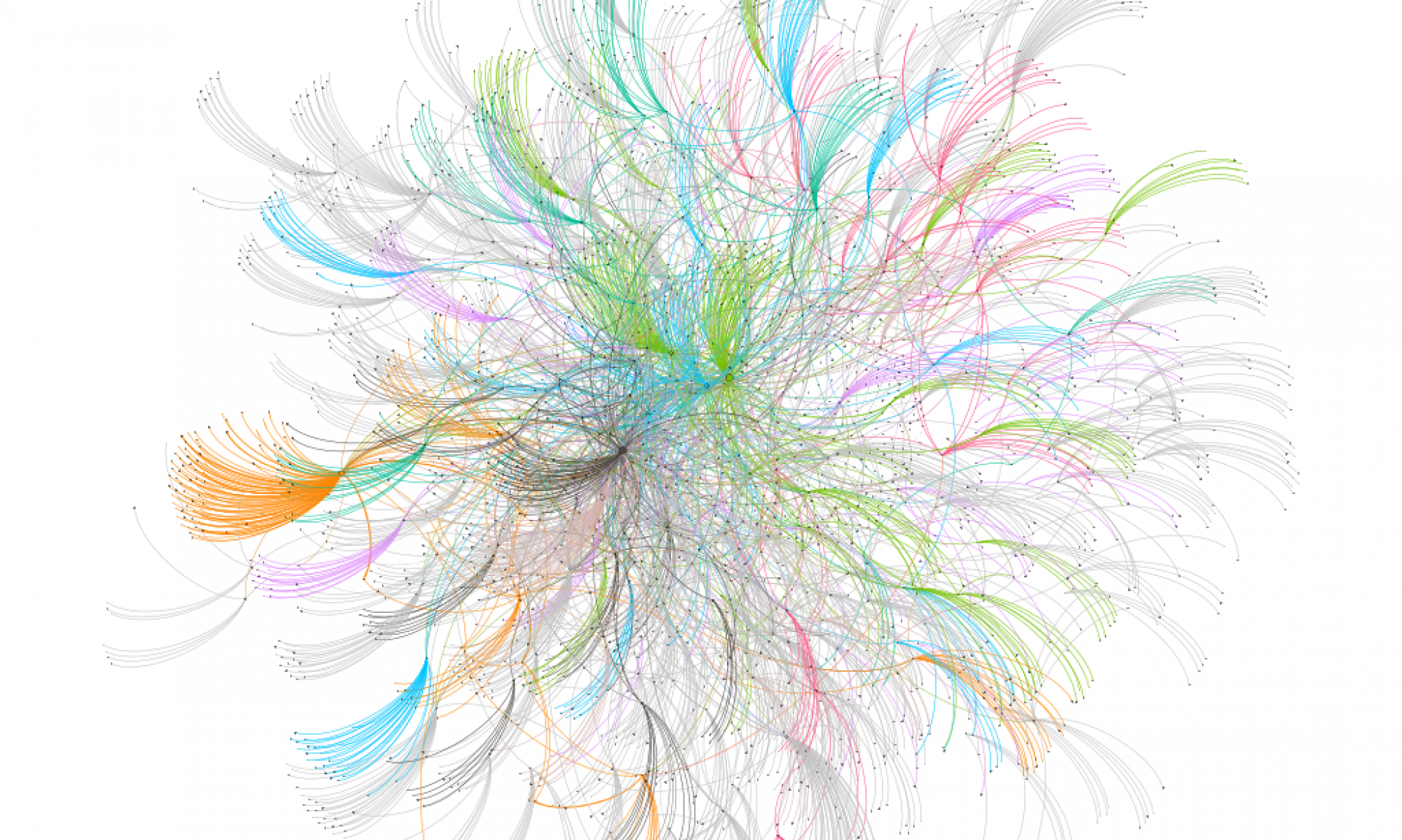

The following steps with the data required careful deliberation with processing and analyzing. Some of the rarely used tags had to be pruned from the network to make it more presentable. On the other hand, too few tags in the first place may lead to overemphasizing of individual posts. This could be seen especially with Twitter preliminary results. Hence, one should be more careful with the amount of data in future research despite whether the focus is on conversations or revealing large phenomena.

During these 11 months we organized three workshops for the company representatives participating in MORE project. The aim of the workshops varied from presenting the quality of the selected social media platforms and data to productive brainstorming inspired by final network visualizations.

At the first MORE workshop in May, we presented some of the Instagram data and preliminary results from Tumblr. The presentation provoked enthusiastic discussion among the participants and a lot of ideas about the future utilization of the method. For the second MORE workshop in September, we prepared a Tumblr data network visualization and revealed a few tags that represent the platform’s character as a creative and undergroundish individuals’ playground.

The data from Instagram, Tumblr and Twitter were analyzed as individual networks for the third and final workshop in November. Also, a slide of weak signals was created. We compiled four questions on Miro platform and participants were divided into three groups based on their department they represent at KONE. They were asked to type an answer to each of the four questions about the insightfulness of the social media network visualizations and the utilization of this new knowledge from the research in their own work. As could be imagined, lots of real-life stories and ideas were shared during this workshop. Personally, I found this workshop very intriguing since it brought our months long work and KONE employees’ practical knowledge on elevator business together.

A summary of the findings collected in the last workshop was presented in January at the Kone Technology & Innovation Talks event held at Kone’s headquarters in Keilaniemi, Espoo. A whopping 150 participants gathered around their computers to listen to the steps and findings of the MORE project. After this, Jaana Hyvärinen from KONE took us for a little tour in the buildings and finally, on top of the main building with one of the many elevators.

This project has involved various tasks from studying and learning from previous research to enjoying the fruits of the whole process by having conversations about the networks with everyone involved in workshops and other meetings in person and online. My part of the work ends here but Päivi and Petteri will continue with the project till the end of June 2023. I thank them for offering me this opportunity to participate in their research project and for all the good lessons I received from them and from the process itself. I hope this pioneering research is useful for the future researchers and its contribution to the sustainability research field a welcomed addition.