Imagine for a second that you’re in the Amazon rainforest. You can hear the river flowing, smell flowers whose names you probably can’t even pronounce, and there’s a mosquito buzzing around your ear. And then you see it: a majestic creature covered in dark spots, a jaguar. Now let me take you for a moment to the frozen land of the Arctic Circle. The snow is dazzling, and you’ve never been so cold in your life, but it doesn’t really matter, because all you can focus on is a beautiful polar bear (whose fur is surprisingly more yellow than you expected).

Now let’s take a step back and return to brutal reality. Did you know that within the next century these animals may become nothing more than a memory? Your great-great-grandchildren may never know what they look like. Maybe you’ll even still be alive then, and you’ll be able to show them an old-fashioned selfie you took at the zoo in 2022.

Needless to say, this is a topic of great importance. That’s what biodiversity loss is all about: the extinction of species both locally and worldwide. The topic is obviously not new, and research on it has been going on for years. But that’s not enough. How can a policy against animal extinction really make a difference if, lacking the bigger picture, it fails to tackle the main cause of the problem? That’s why the article published in November of this year in the journal Science Advances is a game changer.

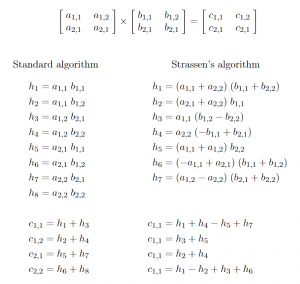

Now, if you’re imagining a bunch of scientists in white lab coats mixing colourful substances and making things blow up, that’s not what happened here. Thirteen dedicated researchers screened 45,162 studies and read 575 of them in full! Can you even imagine going through more than 45,000 studies? To put it in book terms, that’s about half the number of books in Helsinki’s Oodi library! And not all of those 575 studies made it into the final data set; only the 163 most relevant ones did. Of course, that’s not the end, because before all this information could be compared in an overall ranking, it first had to be converted into comparable terms. I’m tired just thinking about it, and we haven’t even got to the maths yet!

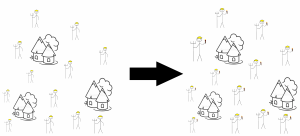

So, after all that research, what’s the most important factor in animal extinction? Can you guess? If your answer was “Well, obviously climate change, I hear about it on TV all the time, duh!”, then you’re very, very wrong. Our all-time winner is land/sea use change, which is basically a fancy way of saying that people take a piece of forest and decide to turn it into farmland (or a factory, or… well, you get the point). Next, neck and neck, come pollution and the direct exploitation of natural resources. Climate change is far behind them. But don’t let that fool you: it’s just as dangerous as the others! First and foremost, it’s intensifying so rapidly that it’s hard to get a grip on it. Another thing to consider is that all the factors are connected, so focusing on just one of them when designing policies against biodiversity loss is like pulling a card from the very bottom of a house of cards you’ve just built: the whole thing is going to fall. And, as if things weren’t complicated enough, the ranking of these factors varies across continents and changes significantly in the oceans!

OK, but what’s next? What’s the conclusion here? Well, above all, we need to realise how complicated the problem is, and that it can’t be solved by combating climate change alone. Current policies that tackle the factors one at a time risk missing the bigger picture. For example, one proposed way to fight climate change is to develop croplands that grow biomass for bioenergy. But what about all the animals that called that land home before we turned it into cropland? That’s why we need to think this through carefully. After all, what’s the use of stopping climate change if there are no animals left? We might as well move to Mars and call it a day. But that’s a topic for another post. Until next time!

Source:

Jaureguiberry, Pedro, et al. “The Direct Drivers of Recent Global Anthropogenic Biodiversity Loss.” Science Advances, vol. 8, no. 45, 2022, p. eabm9982, https://doi.org/10.1126/sciadv.abm9982.

Author:

Ala Żukowska