Found in Translation 2024

The FoTran project is going towards its end and this event will celebrate the project, co-located with the PhD defense of one of our team members, Aarne Talman.

- Date: Thursday, February 22, 2024

- Place: University of Helsinki, Central Campus

- morning session: Soc & Kom, room 210, Snellmaninkatu 12, Helsinki

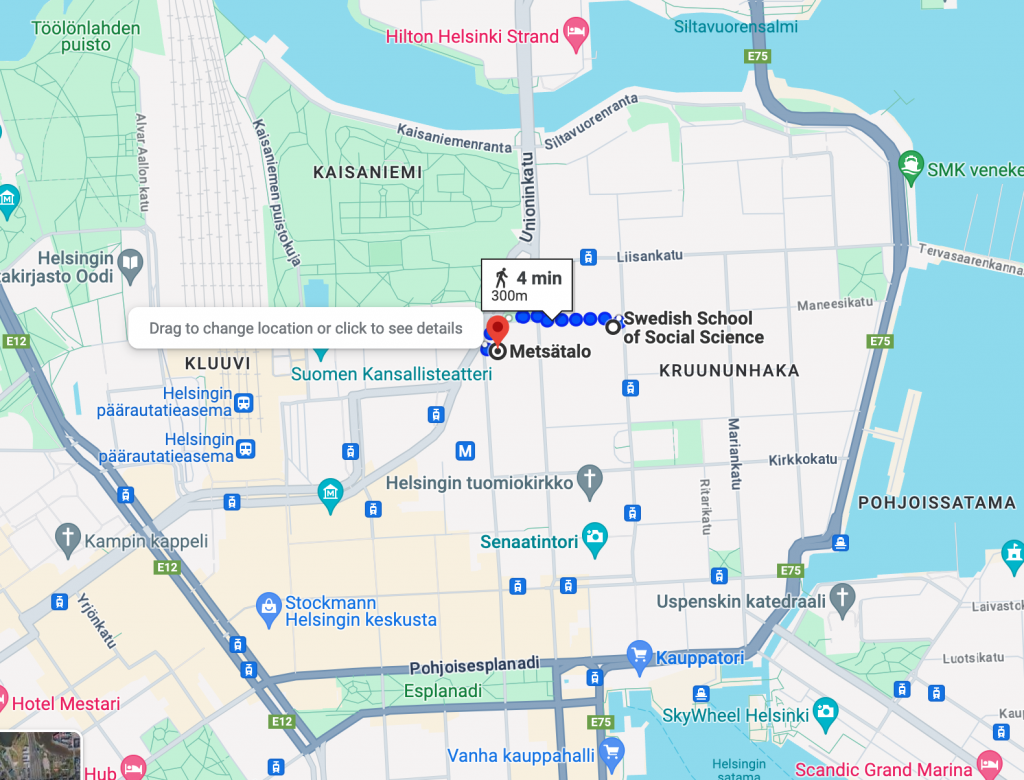

- afternoon session: Metsätalo, room B214 (hall 4), Unioninkatu 40, Helsinki

- Registration: There are no fees for participation but we would appreciate your registration to help with the logistics.

- Live stream of talks: Zoom

Invited speakers

- Vered Shwartz (University of British Columbia)

-

- Vered Shwartz is an Assistant Professor of Computer Science at the University of British Columbia, and a CIFAR AI Chair at the Vector Institute. Her research interests include commonsense reasoning, computational semantics and pragmatics, and multiword expressions. Previously, Vered was a postdoctoral researcher at the Allen Institute for AI (AI2) and the University of Washington, and received her PhD in Computer Science from Bar-Ilan University. Vered’s work has been recognized with several awards, including The Eric and Wendy Schmidt Postdoctoral Award for Women in Mathematical and Computing Sciences, the Clore Foundation Scholarship, and an ACL 2016 outstanding paper award.

- Alessandro Raganato (University of Milano-Bicocca)

-

- Alessandro Raganato is a tenure-track Assistant Professor (RTDb) at the University of Milano-Bicocca, in the Department of Informatics, Systems and Communication (DISCo). He is a member of the IKR3 Lab headed by Prof. Gabriella Pasi, working on Natural Language Processing (NLP). Previously, he was working at the FoTran project in Helsinki and did his PhD at the SapienzaNLP Lab of the Sapienza University of Rome under the supervision of Prof. Roberto Navigli, as a member of two ERC-funded projects (MultiJEDI and MOUSSE).

- Marianna Apidianaki (University of Pennsylvania)

-

- Marianna Apidianaki works as Senior Research Investigator in the Department of Computer and Information Science (CIS) at the University of Pennsylvania in Philadelphia, on leave from the French National Research Center (CNRS) where she holds a tenure researcher position. She is also associated with the NLP group in the Department of Informatics at the Athens University of Economics and Business. From 2019 to 2021, she worked as Senior Researcher in the Language Technology lab at the University of Helsinki. Since 2017, she holds an adjunct appointment in the Computer and Information Science Department at the University of Pennsylvania.

Program and schedule

The event is open for anyone and we will also stream the talks. More information coming soon. The tentative program looks like this:

- 9:30 – Morning coffee

- 10:00 – Welcome and a short background on the FoTran project [video]

- 10:30 – Alessandro Raganato (University of Milano-Bicocca)

- Title: Text Representation Odyssey: Sailing across Neural Machine Translation, Multimodal Tasks, and Text Hallucinations [video]

- Abstract: This presentation summarizes my previous and current work on text representations and beyond. The first part of this talk will be about Neural Machine Translation, delving into the capabilities of the attention mechanism in Transformer models. In the second part, we shift gears and we will dive into a recently shared task on Visual Word Sense Disambiguation and 3D point cloud matching. Finally, I will give an overview of ongoing work on detecting text hallucinations.

Given the diverse range of topics, the aim is to bring forth these materials to initiate a fruitful discussion with the audience.

- 11:15 – Marianna Apidianaki (University of Pennsylvania)

- Title: Lexical semantic knowledge in a contextualized embedding space: Insights, challenges and future work

- Abstract: In this talk, I will present work I carried out while in FoTran on the topic of lexical semantic knowledge representation. I will explain the benefits of using contextualized representations to study lexical semantics and will present the methodology that can be used to address different types of knowledge. I will then explain some challenges and limitations posed by contextual language models for this type of study. These are related to difficulties finding out the knowledge contextualized representations encode due to their nature and the geometry of the constructed embedding space; models’ high sensitivity to the prompts used; biases large language models learn from the data they are trained on; the content of semantic resources used for evaluation; and the intricate relationship between probing and explainability.

Lunch break (Restaurant Bro at the Hilton Strand hotel)

- 14:00 – Vered Shwartz (University of British Columbia)

- Title: Reality Check: Natural Language Processing in the era of Large Language Models [video]

- Abstract: Large language models (LLMs) contributed to a major breakthrough in NLP, both in terms of understanding natural language queries, commands or questions; and in generating relevant, coherent, grammatical, human-like text. LLMs like ChatGPT became a product used by many, for getting advice, writing essays, troubleshooting and writing code, creative writing, and more. This calls for a reality check: which NLP tasks did LLMs solve? What are the remaining challenges, and which new opportunities did LLMs create? In this talk, I will discuss several areas of NLP that can benefit from but are still challenging for LLMs: grounding, i.e. interpreting language based on non-linguistic context; reasoning; and real-world applications.

- 15:30 – Poster/demo session with snacks and refreshments

- OPUS – open resources and tools for machine translation

- Releasing the MAMMOTH – a framework for modular multilingual translation models

- The OPUS-MT Dashboard A Toolkit for a Systematic Evaluation of Open Machine Translation Models

- Uncertainty-Aware Natural Language Inference with Stochastic Weight Averaging

- Unsupervised Feature Selection for Effective Parallel Corpus Filtering

- …

19:00 – Dinner (restaurant Zetor)

FoTran PhD Defence – Aarne Talman

- Date: Friday, February 23 at 13:15

- Place: Room 303, Unioninkatu 33, Helsinki

- Dissertation: http://urn.fi/URN:ISBN:978-951-51-9581-4

More info about the defence is available in this press release.