Together with researchers from the Tampere University of Technology and Aalto University, we have recently been studying the continuous deployment phenomenon from different angles. In our latest installment, we studied the toolchains and the development processes of software-intensive companies based in Finland. We wanted to find out what kind of development and deployment pipelines companies have, how well the development stages are automated with tools, and how the usage of tools relates to release frequency in practice. The article Improving the delivery cycle: A multiple-case study of the toolchains in Finnish software intensive enterprises was just published in the December 2016 issue of Information and Software Technology.

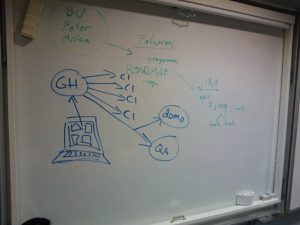

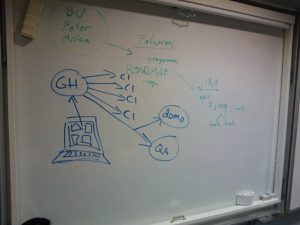

A process picture drawn by a company representative in an interview

Our case study data consists of information collected from 18 cases (17 companies) using semi-structured interviews as the data collection method. Many of the companies are from the Need for Speed research program, which we have been part of since 2014. In the interviews, we asked the company representatives to draw (yes, with an actual marker or a pen) their development processes and to name the concrete tools they use in each development stage. We also asked about their release and deployment protocols. Using thematic analysis, we built a toolchain for each case from this data.

Looking at the results from the cases, tools from several categories could be considered the de facto standard for software development. Version control and build tools fit in this category. Then again, many of the development stages were completed without any automatic tools at all: they either required manual intervention or were omitted altogether as development activities. Fully automated toolchains were rare and deployments were often done manually. Acceptance testing is one example of an activity that was missing from the companies' toolchains as an automated step.

The fastest of the companies were able to release software pretty much daily. Some of the slower companies had the ability to release once a month or so. The actual production release frequency differed, however, from the ability to do a release. Sometimes the actual release cycles were longer than a year, depending on the domain. Domain differences can matter a great deal, and the gaming companies stood out in choosing to use fewer automated tools, such as continuous integration. It seemed that companies with more complete toolchains were on the faster end when comparing release frequencies, so perhaps automated toolchains do help in increasing the release frequency, but the relationship is not a simple one. An internal capability to release in the range of two weeks can be achieved with relatively few tools, and the release frequency can also be low even when a company has a solid toolchain in use.

We can conclude that a good automated toolchain can be an asset if a company wishes to strive for continuous deployment or to generally improve its release capability. Cultural factors should not be overlooked, but tools might just help in the process.

If you want to read the full story behind the study, go read the article from Elsevier. Elsevier has provided us with a link that gives free access to the article until November 25th, 2016, so you have a month to check out the article, after which normal Elsevier subscription rules and fees apply. I hope you enjoy reading the article, and I would be glad to hear any comments you might have on the subject.

Simo Mäkinen, Marko Leppänen, Terhi Kilamo, Anna-Liisa Mattila, Eero Laukkanen, Max Pagels, Tomi Männistö, Improving the delivery cycle: A multiple-case study of the toolchains in Finnish software intensive enterprises, Information and Software Technology, Volume 80, December 2016, Pages 175-194, ISSN 0950-5849, http://dx.doi.org/10.1016/j.infsof.2016.09.001. Open access link http://authors.elsevier.com/a/1Tqqq3O8rCGzPw (expires 25th November 2016).

Wishing all the readers a colorful autumn,

Simo Mäkinen

University of Helsinki

Department of Computer Science