We arranged seven-week “Exact Greenhouse” courses at the Department of Computer Science of the University of Helsinki between 2014 and 2016. The courses attracted a total of 47 students with different skill sets who were interested in building a greenhouse maintained by microcontroller-based devices. The only requirement for attendance was a completed BSc degree in any of the specialisation lines of computer science. This requirement was set because participants would benefit from knowledge that typically develops during BSc thesis work.

Greenhouse technology was chosen as the topic because of the abundance of creative ideas and tutorials available online. As industrial systems for agricultural automation have existed for decades, a wide range of small-scale consumer goods was available. Many of them integrated mobile interfaces and social aspects into gardening, providing rich inspiration for innovative product design. Thanks to the numerous online tutorials for do-it-yourself prototyping, evaluating possible technical architectures was easy already at a very early stage.

A Facebook course page was established, and inspiring ideas and instructions for completing the first milestones were communicated through it. For students who did not use Facebook, the same information was delivered by email. This blog post summarises the learning outcomes of the courses over the three years.

You can find the story of the research lab facility here.

The first course in 2014 attracted 12 students.

Course page.

| Problem | Solution | Microcontroller | Members |

| Maintainers want to view the status of the facility to decide which maintenance tasks are required in the future. | A web service that provides a graph visualization for any IoT device that is configured to push data through its general purpose API. | Arduino Duemillanove | 1 |

| A maintainer needs to be alarmed if plants dry up. | A product concept for a smart flowerpot container with a water reserve. | Electric Imp | 2 |

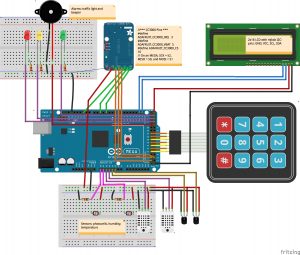

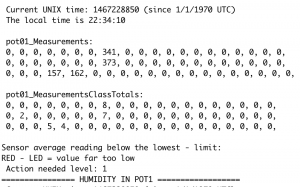

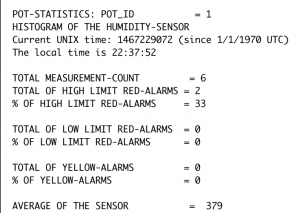

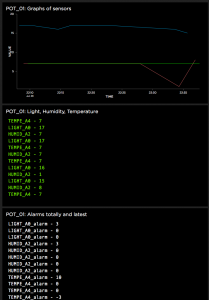

| Humidity sensor unit to be placed in soil. The system alarms maintainers by creating a sound, helping to locate the plant when visiting the greenhouse. | Arduino Uno | 1 | |

| The greenhouse gets too hot and humid during the afternoons. If doors are left open, it will get too cold during the night. | A six point temperature and humidity sensor system | Intel Galileo | 1 |

| An automated fan system, using data from the previous project | Intel Galileo | 1 | |

| Hydroponic cultivation is failing constantly for an unknown reason. | A device that measures a wide range of environmental variables from air and water. | Arduino Yun | 1 |

| Greenhouse maintenance team wants to know who has visited the greenhouse recently. | A NFC keychain system to track visitors. | Raspberry Pi | 1 |
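To make the fan project in the table above more concrete, here is a minimal sketch of how readings from several sensor points could drive a fan decision. It is not the students' implementation: the `Reading` type, the `fan_should_run` function and all threshold values are assumptions made for illustration. The sketch averages the readings and uses separate switch-on and switch-off limits so that the fan does not flicker around a single threshold.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    """One temperature and humidity sample from a single sensor point."""
    temperature_c: float
    humidity_pct: float


# Hypothetical limits chosen for illustration only.
TEMP_ON_C = 30.0
TEMP_OFF_C = 26.0
HUMIDITY_ON_PCT = 80.0
HUMIDITY_OFF_PCT = 70.0


def fan_should_run(readings, fan_is_on):
    """Decide the next fan state from the averaged sensor readings."""
    avg_temp = mean(r.temperature_c for r in readings)
    avg_humidity = mean(r.humidity_pct for r in readings)
    if fan_is_on:
        # Keep running until both averages fall below the lower limits.
        return avg_temp > TEMP_OFF_C or avg_humidity > HUMIDITY_OFF_PCT
    # Start only when one average exceeds its upper limit.
    return avg_temp > TEMP_ON_C or avg_humidity > HUMIDITY_ON_PCT
```

With these assumed limits, a fan that is already running at an average of 28 °C keeps running, while a stopped fan at the same temperature stays off.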

23 students enrolled in the second course in 2015.

Here are their projects:

| Problem | Solution | Microcontroller | Members |

| Plants grow unevenly as sunlight enters the greenhouse from one direction only | A light-sensitive, rotating platform. | Arduino Yun | 2 |

| Greenhouse maintainers may not be available at all times. | Automated growing system that adjusts flow of water from a large tank and sends pictures and state information on Twitter. | Raspberry Pi | 2 |

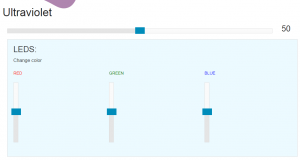

| Plants need different kinds of light for efficient growth. | Sapling container with adjustable lightning. | Raspberry Pi | 3 |

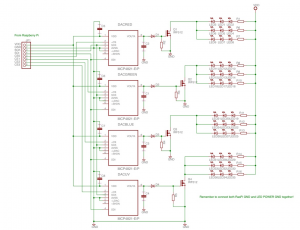

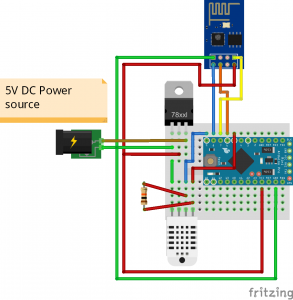

| Different plants consume water at different pace. | A set of independent sensor modules for one microcontroller unit. | Arduino Pro Mini | 2 |

| As the amount of plants increase or decrease, several maintenance tasks are needed. | Hydroponic growing platform that can be easily extended as the farm grows. | Arduino Uno | 3 |

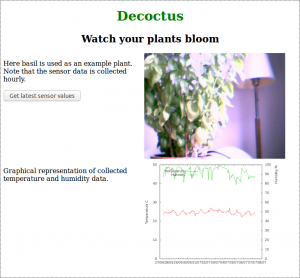

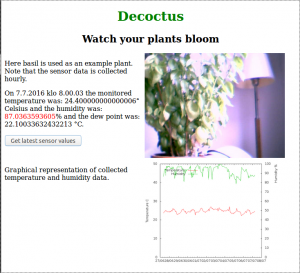

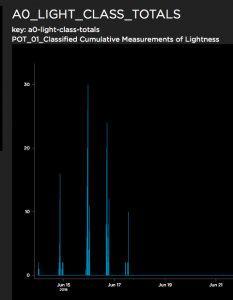

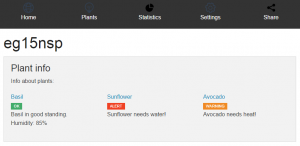

| Maintainers want to overview the status of the facility to evaluate which maintenance tasks are required. | A multifaceted web service providing a graphical visualization. | Arduino Yun | 2 |

| Temperature of the greenhouse gets very high in the afternoon. If the air is moist, soil and plants may develop mold. | Automatically functioning pulley system that adjusts ventilation according to temperature and humidity. | Arduino Yun | 2 |

| N/A | Device measures air temperature, humidity, CO2 | Arduino Uno | 3 |

| Device measures soil temperature, humidity and light | Arduino Uno | 1 |
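Several of the projects above send measurements from a device to a web service for visualisation. As a rough sketch of what such a push could carry, the snippet below packs one batch of sensor values into a JSON document. The function name `build_payload`, the field names and the device identifier are all hypothetical; the actual data format used in the course projects is not described in this post.

```python
import json
from datetime import datetime, timezone


def build_payload(device_id, measurements, taken_at=None):
    """Serialise one batch of sensor values as a JSON string.

    The field names below are illustrative assumptions, not a documented API.
    """
    if not measurements:
        raise ValueError("at least one measurement is required")
    taken_at = taken_at or datetime.now(timezone.utc)
    document = {
        "device": device_id,
        "timestamp": taken_at.isoformat(),
        # Store every value as a float so the receiver sees one numeric type.
        "measurements": {name: float(value) for name, value in measurements.items()},
    }
    return json.dumps(document, sort_keys=True)


# Example with a hypothetical device name and measurement keys.
payload = build_payload(
    "greenhouse-node-1",
    {"air_temperature_c": 24.5, "air_humidity_pct": 61},
    taken_at=datetime(2015, 5, 1, 12, 0, tzinfo=timezone.utc),
)
```

A self-describing format like this lets a single service accept data from devices that measure different variables, which matches the idea of one visualisation service for many kinds of sensor units.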

The last course in 2016 attracted 12 innovators.

Course page.

| Problem | Solution & link to the project page | Microcontroller | Members |

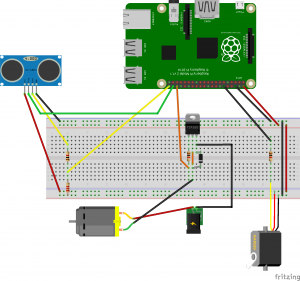

| The greenhouse attracts pigeons | Automated movement sensing water gun to deter the pigeons. | Raspberry Pi | 1 |

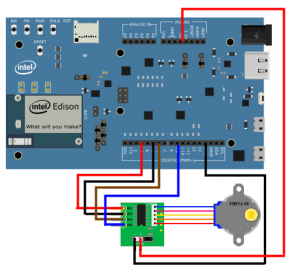

| Plants need different kinds of light and shade periods for efficient growth. | Automated system to control built-in lights and shades | Intel Edison | 1 |

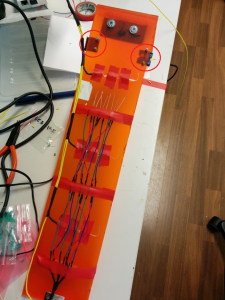

| Maintainers need to be notified when soil dries. Maintainers want to reconfigure the device to match needs of different plants. | Automated system to control built-in lights and shades | Arduino Mega | 2 |

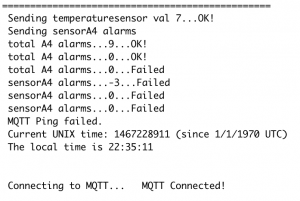

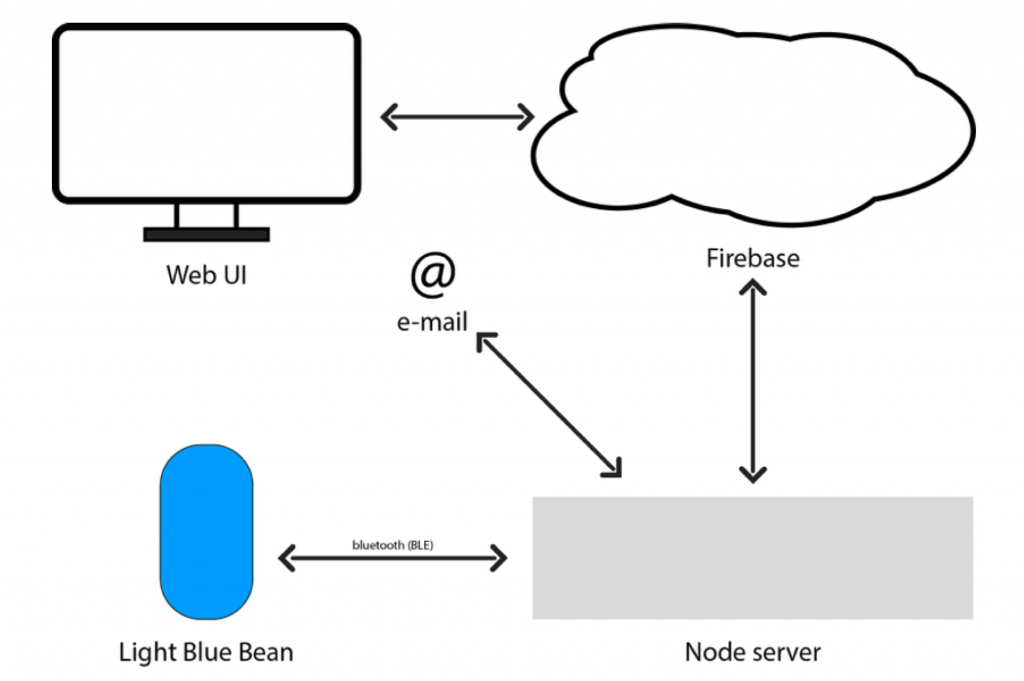

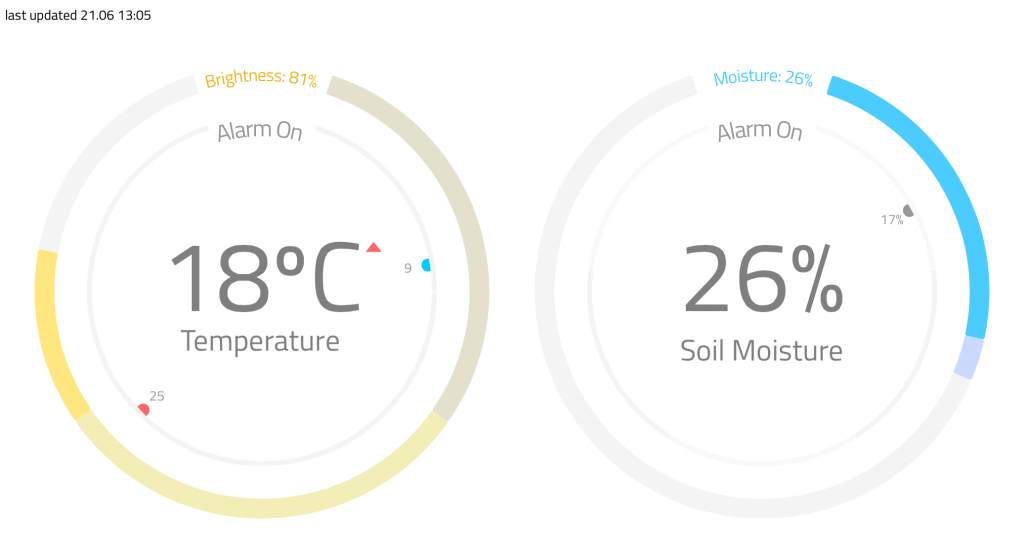

| Maintainers need to overview the state of the facility and be alarmed if a reaction is needed. | Advanced web visualisation prototype | Lightblue bean | 1 |

| A system that monitors temperature and humidity of soil. Image analysis tool for identifying changes in the look of the plants. | Raspberry Pi | 1 |
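One recurring need in the table above is a dry-soil notification that can be reconfigured for different plants. The following is a minimal sketch of that idea, assuming moisture is reported as a percentage per plant. The function `plants_needing_water`, the default limit and the example plant names are made up for illustration and do not come from the student projects.

```python
# Hypothetical fallback limit: minimum acceptable soil moisture in percent.
DEFAULT_THRESHOLD_PCT = 30.0


def plants_needing_water(moisture_by_plant, thresholds=None):
    """Return the names of plants whose soil moisture is below their limit.

    `thresholds` maps a plant name to its own limit; plants without an
    entry fall back to DEFAULT_THRESHOLD_PCT.
    """
    thresholds = thresholds or {}
    dry = []
    for plant, moisture in moisture_by_plant.items():
        limit = thresholds.get(plant, DEFAULT_THRESHOLD_PCT)
        if moisture < limit:
            dry.append(plant)
    return sorted(dry)


# Example readings and per-plant limits (illustrative values only).
readings = {"basil": 35.0, "cactus": 12.0, "tomato": 25.0}
limits = {"basil": 40.0, "cactus": 10.0}
dry_plants = plants_needing_water(readings, limits)
```

Keeping the limits in a separate mapping means that adapting the device to a new plant only requires changing configuration, not the alert logic.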

Publications

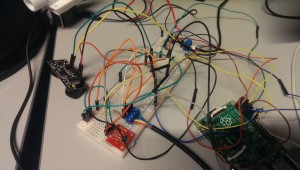

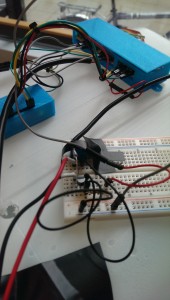

This article describes the hardware required to arrange a similar course and the first year’s course syllabus.

“Blending Problem- and Project-Based Learning in Internet of Things Education: Case Greenhouse Maintenance.” Proceedings of the 46th ACM Technical Symposium on Computer Science Education. ACM, 2015.

This article summarises the lessons we learned from teaching the three courses and offers key takeaways.

“Assessing IoT projects in University Education – A framework for problem-based learning.” International Conference on Software Engineering (ICSE), Software Engineering Education and Training (SEET), 2017.

Many thanks to the awesome research and teaching team.

Hanna Mäenpää, Samu Varjonen, Arto Hellas et al.