20.9.2018 was historical day as then the 1st international workshop on reading music systems was held. From the National Library of Finland there were two participants in order to hear about music recognition and to get new insights on what kind of new methods researchers have come up with machine learning or with optical music recognition (OMR).

The lightweight proceedings of papers can be found from Worms homepage. All of the papers went through a light-weight review in the OpenReview-platform, after which authors could adjust they papers accordingly.

Notes from organizers’ (Jorge Calvo-Zaragoza) opening words about background of the workshop

He mentioned, that despite OMR has been researched a while, but there is still no focused community. The of independent research seems to stay independent even though there are used different approaches, data formats, evaluation criteria and even datasets, but very few re-uses of previous works. This is also something that all hackathons in general seem to end up – hackathons always end up with new ideas, so then all the old ideas are left in the sides.

History of WorMS: In 12th IAPR intl workshop on graphics recognition (GREC’17) , a number of IMR people happened to meet. There after some discussions, they came to conclusion that OMR should define itself, and also probably find its proper place in the crux of the different research areas.

So in order to widen the user-base, the workshop was defined to be about systems that read documents of written music. Not strictly exclusive of OMR, but instead to cover any computational task for which the objects of study are related to written music document fits:

- omr

- information extraction

- keyword spotting

Workshop on Reading music systems

- The first edition is held as a satellite of ISMIR’18

- there exists several events about machine learning, which can be applied to document analysis.

Session 1.

Jan Hajič jr. from Charles University had a thought-provoking talk on the intrinsic evaluation of the OMR. Sheet music has many aspects to monitor and there might not be a one common metric to rule them all. For example, one eye-opening example was that there is no good “edit-distance” for music scores.

In a way, many of the things did sound so very similar as the text recognition errors, which is quite familiar field from the post-processing of digitization or which you might have seen when analysing texts of the old Finnish newspapers.

Alexander Pacha from TU Wien aimed to the building of a community of OMR and strived to support reproducible research via coding conventions and just by promoting the idea of sharing datasets and code with appropriate licenses. These OMR datasets can be found here: https://apacha.github.io/OMR-Datasets/ , which should cover at least some state of the art quite well. As a summary he emphasized these rules as a staring point:

“Publish your datasets , strive for compatibility, prefer simple encodings and formats”

Session 2: Applications and Interactive Systems

|

Interesting software from a group doing pattern recognition and ML who then advanced to OMR. The medieval scores are not easy to understand by modern musicians. The system does layout analysis, symbol detection & recognition. |

|

Pixel.js is a web based app to correct the output of image segmentation processes (Saleh et al.) |

… |

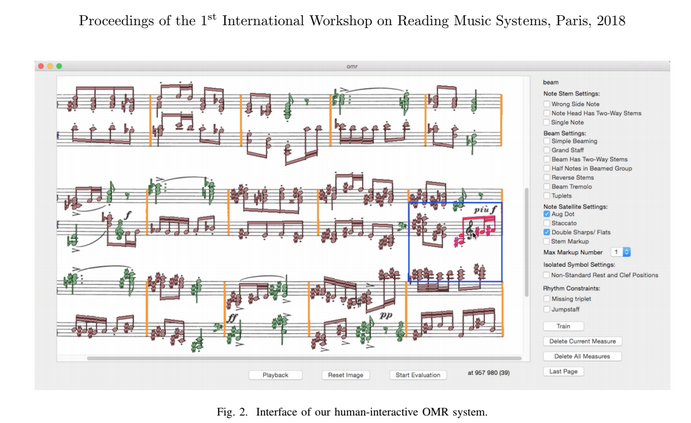

Aims to recognize symbols, chords, beam groups etc. Involving end-user to get to more granular level. |

[1] Choi et al. figure 2. Interface of human-interactive OMR system.

| A Starting Point for Handwritten Music Recognition by Arnau Baró, Pau Riba, Alicia Fornés | |

| Music Symbol Detection with Faster R-CNN Using Synthetic Annotations by Kwon-Young Choi, Bertrand Coüasnon, Yann Ricquebourg, Richard Zanibbi | Idea was to do symbol recognition and utilize faster R-CNN in that task. SmuFL was used for the music font layout. Also mentioned was DocCreator which was a system developed for creation of ground truth. |

| DeepScores and Deep Watershed Detection: current state and open issues by Ismail Elezi, Lukas Tuggener, Marcello Pelillo, Thilo Stadelmann | The target data used multiple ligthning conditions and multiple different printers for example, to get variance, which can be difficult for software. |

and then finally the session 4. about user perspective.

It was interesting to be in a ‘user track’ as also library as such as users as all digitisation is done to support the public and the researchers. But in a way it was very accurate as we would very much like to use existing research, methods and datasets and just find ways how to efficiently integrate them to our own internal workflows.

| Digitisation and Digital Library Presentation System – Sheet Music to the Mix Tuula Pääkkönen, Jukka Kervinen, Kimmo Kettunen | Our talk to explain initial concept how enrichment workflow could operate with sheet music and how to integrate “locating” of sheet music to the existing search of the contents of the digital materials. |

| Music Search Engine from Noisy OMR Data by Sanu Pulimootil Achankunju | An state-of-the art description how Bavarian State Library has enabling improved search and use of famous German composers. |

| How can Machine Learning make Optical Music Recognition more relevant for practicing musicians? by Heinz Roggenkemper, Ryan Roggenkemper | An eye-opening talk about the need of musicians. The OMR, pitch recognition should be practically perfect to be fully useful. |

Final thoughts

All in all very good workshop. In the side discussion with some of the participants we got also hints about possible datasets and code to use, so thank you everyone for those. As one interesting topic also the IIIF protocol popped up in the final wrap-up as there was just recently created an AV module for it, which enables the vision that was explained in our talk – having everything integrated, the music sheet, the tracking of played notes and even video of having someone playing that said note. IIIF is turning up to be very potential platform, which makes it possible to connect several libraries materials via one common “pipeline”. Existing digitizations get new push via it.

References

[1] Choi, K.-Y., Coüasnon, B., Ricquebourg, Yann, & Zanibbi, R. (2018). Music Symbol Detection with Faster R-CNN Using Synthetic Annotations. In Calvo-Zaragoza, Jorge, J. H. jr, & Pacha, Alexander (Eds.), Proceedings of the 1st International Workshop on Reading Music Systems (pp. 9–10). Paris.