The air was cloudy, but at the final days of the Transcribus project there was a seminar in National Archives of Finland (NAF) going through the outcomes of the project . After welcome words of Maria Kallio and Vili Haukkovaara we went onwards with presentations from the developers and users.

Panel participants of the seminar.

Günther Mülberg from Uni of Innsbruck tells about READ-COOP , about what was the beginning. In the Consortia there were archives doing digitisation, but also universities, some who did manual transcribing and those who are expert in transcribtion and pattern matching.

Facts of Transkribus

- EU Horizon 2020 Project

- 8,2M eur funding

- 1.1.2016- 30.6.2019

- 14 partners coordinated by Uni of Innsbruck

Focus:

- research: 60% – Pattern recognition, machine learning, computer vision,…

- network building: 20% – scientific computations, workshops, support,…

- service: 20% – set up of a service platform.

Service platform:

- digitisation, transcription, recognition and searching hisorical docs

- research infrastructure

User amount has doubled every year.

In 4-11.4.2019

- images uploaded by users 98166; new users 344; active users 890, created docs 866, exported docs 230, layout analysis jobs: 1745, htr jobs 943

- Model training is one major thing

Training data

- Jan 2019: 228 HTR models trained by Transkribus user

- Pages: 204.359; words 21.200.035

- Value: about 120 person years, monetary value ca 2-3 Million EUR

Co-op society SCE presentation

- enable collab of independent institution

- distributed ownership and sharing of data, resources and knowledge in the focus

Main features of an SCE

- Mixture between an association and a company

- Members acquire a minimum share of the SCE – 1000/250 Eur

- Open for new members, members can leave anytime

Foundation of READ-COOP 1.7.2019 : founding members are listed on webpage alreay.

“Every institution or private person willing to collaborate with Transkribus is warmly invited to take part”.

E.g. NewsEye project was seen as one collaborative project towards Transkribus in the layout analysis part. Also with NAF there is a name entity recognition effort where things continue.

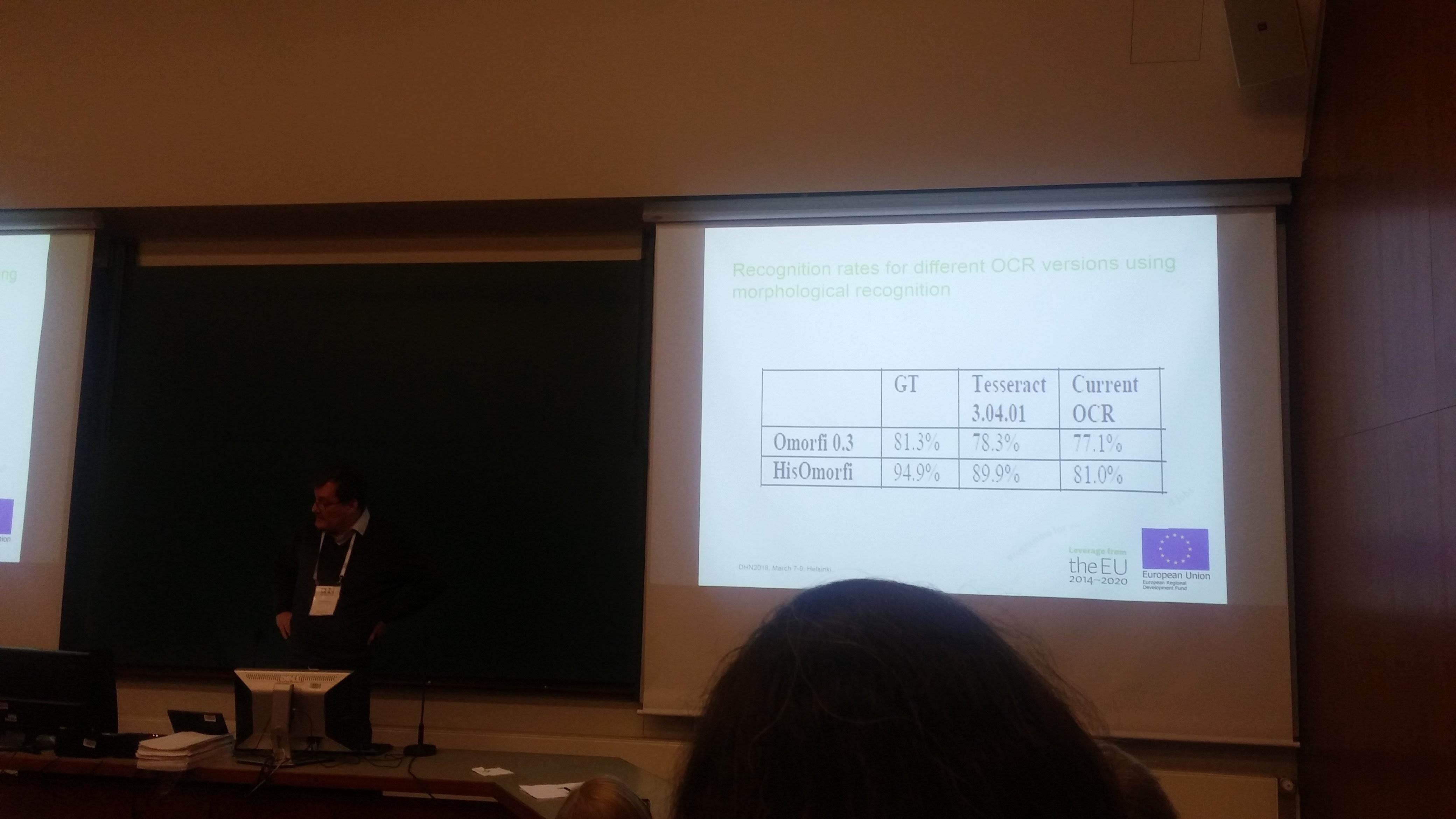

During presentations from the developers of the Transkribus platform and the users it became evident that the software has indeed matured. The character error rates were at 10% of at best can be less than 3%, so system has evolved considerably. One interesting topic might also be keyword spotting (KWS), where even with poor OCR the search is still able to find the particular word of interest from the data.

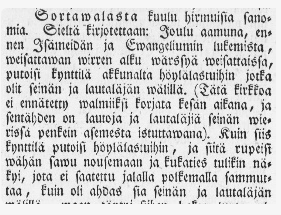

Interesting also was the ‘text-to-image’ where it might/should be possible to “align” a clear text and its corresponding page image – this could help as in some cases blogs or even wikipedia can have corrected text but it is separated from the original collection.

Also the segmentation tool (dhSegment) looked promising, as sometimes the problem is there, and the tool supports diverse documents from different time periods. There via masks done to the page, the neural network can be thought to find anfangs , illustrations or whatever part in the page is the target. The speaker also told that if the collections are homogeneous they might not need even that much training data.

As of today, the Transkribus changes to a co-operative (society), so it needs to be seen how the project outcomes evolve after the project itself has ended.