The Digitalia project took part in the #SSDA2018 conference, whose aim was to bring together researchers who work on document analysis of various materials. This time the seminar gathered roughly 40 people from all over the world.

The summer school opened with Jean-Marc Ogier, who set the table with interesting examples of the past and present of document analysis and of how changes in media cause new research fields to appear: from digitized materials to born-digital ones, from physical signatures to online signatures, and so forth, each of which also makes it possible to capture new information. The International Association for Pattern Recognition (IAPR) working groups were also presented: IAPR TC-10 is the Technical Committee on Graphics Recognition, and IAPR TC-11 covers Reading Systems. Finland is part of the IAPR via the Pattern Recognition Society of Finland.

There was also an introduction round at the L3i laboratory, where various researchers gave us a bit of their time and presented their work via demos. We got a glimpse of the equipment used in the lab and of the various research done there.

One demo was about segmentation and text capture for comics, i.e. recognizing the individual panels, then the text, and also which character is speaking. It was developed for various online comics and some digitized ones. It was patterns all the way: first detecting the contours of the panels, then the characters, and then the speech balloons, which were processed further to extract their text.

The second demo was about transcribing video. The system picked one frame from each second of the input video and then transcribed both the content of the captured image and the audio. With the help of a search engine, it was then possible to find a given piece of text in the video simply by searching.
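To make the "one frame per second" idea concrete, here is a minimal sketch in plain Python. It is not the L3i system: the function names `frames_to_sample` and `search_transcripts`, and the idea of storing one transcript string per sampled second, are assumptions made only for illustration.

```python
from typing import Dict, List


def frames_to_sample(total_frames: int, fps: float, every_seconds: float = 1.0) -> List[int]:
    """Return the indices of the frames to keep, one per `every_seconds` of video."""
    if fps <= 0 or every_seconds <= 0:
        raise ValueError("fps and every_seconds must be positive")
    indices = []
    k = 0
    while True:
        # Frame closest to the k-th sampling instant.
        idx = round(k * every_seconds * fps)
        if idx >= total_frames:
            break
        indices.append(idx)
        k += 1
    return indices


def search_transcripts(transcripts: Dict[int, str], query: str) -> List[int]:
    """Return the seconds whose transcript contains `query` (case-insensitive)."""
    needle = query.lower()
    return sorted(sec for sec, text in transcripts.items() if needle in text.lower())
```

In a real pipeline, the transcript for each second would come from OCR on the sampled frame and speech recognition on the audio; here it is just a dictionary from second to text, so the search step is a plain substring match rather than a full search engine.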

Another demo was an experiment in monitoring traffic in the harbor. The system detected moving ships as they arrived or left: it picked out each boat in the video and thus made it possible to compile statistics on how many boats arrived and how many left. This worked in real time, highlighting the boats in the video feed and also placing them on a map of the harbor area, which could be very useful in a dashboard. A second live video system recognized faces and aimed to capture sentiments, for example surprised or content. There were six basic feelings, and the system showed a "bar" for each one based on its probability.

Bart Lamiroy from the University of Lorraine talked about a familiar concern in “Honest, Reproducible Reporting of Results is Difficult … an Analysis and some Good Practices”: how to do good research and how to spot it when you see it. The familiar issues are all there: which software and modules were used, and how fast they change, make it harder for the next researcher to reproduce the results. There are many tiny details that the publication might not include, and the tenet of “publish or perish” can put pressure on researchers, leaving them too little time for documentation that could be invaluable for the next reader.

Andreas Fischer from the University of Fribourg, Switzerland, then took the participants through structural methods of handwriting analysis. His research team had worked with, for example, George Washington’s letters from the Library of Congress. In the lab, Diva Services http://divaservices.unifr.ch/data is an attempt to create a platform where a researcher can provide data and methods for other researchers to use, reducing the amount of software that has to be installed on one's own machine. Diva Services enables remote processing via specified APIs: a researcher first uploads documents (i.e. page images) to the service, then calls the API of a method and gets the results back via that API. It is a very nifty idea, and one more implementation of such an analysis system for researchers. For now it is suited to small experiments, as the service is powered by a single machine. Still, that was enough for one participant to use its methods to distinguish between different Japanese script characters, which in the end won her the excellence award of the summer school.

Poster session

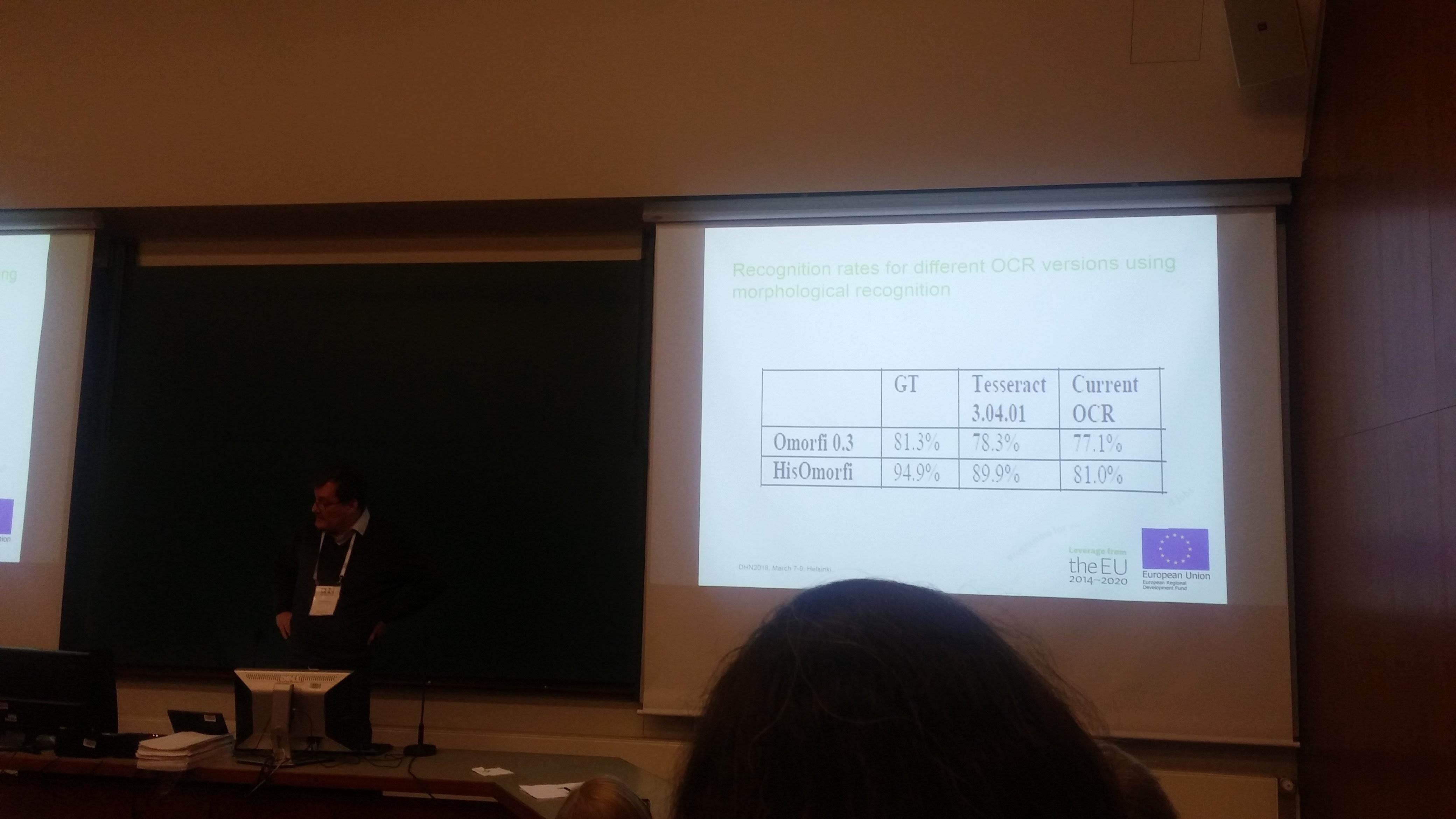

The poster session was also eye-opening: there were so many different kinds of problems where machine learning was applied whenever possible. There were OCR issues, very fragile materials like palm leaves, and scripts and languages for which Unicode fonts do not even exist. Team sizes varied too: some researchers worked alone in their university, while one lab had 25 people doing research. In any case, hearing about research done elsewhere was interesting, and some of it needs to be followed up later on.

Deep Neural Networks and data

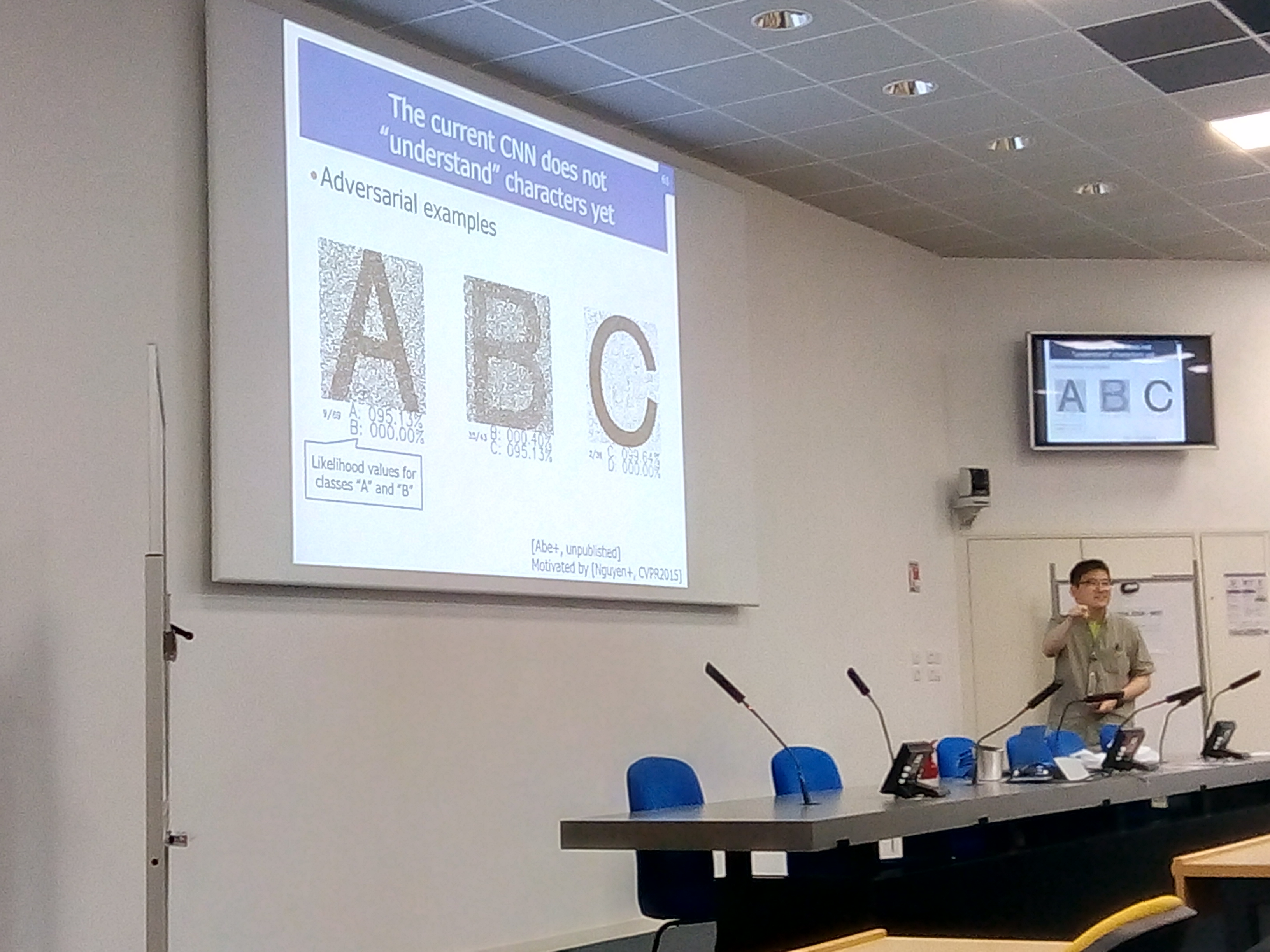

Seiichi Uchida from Kyushu University (Japan) walked us into deep neural networks in document analysis and actively encouraged thinking that goes beyond 100% accuracy. His slides can be viewed below:

Vincent Poulain-d’Andecy from YOOZ (France) gave the industrial talk, with a multitude of examples of all the kinds of document types and formats we actually live with. For example, IAPR’s TC-10 and TC-11 maintain a number of datasets that researchers can run their own algorithm against. This gives a shared baseline and benchmark, which helps in evaluating how well a specific algorithm works.

David Doermann from the University at Buffalo (USA) talked about document forensics in document analysis and about the current situation, where images, video and audio can be created in such a way that the real thing can be impossible to distinguish from a fake. He defined the terminology and gave various examples from different centuries of how forgeries and tampering have been attempted and, on the other hand, how they are and were prevented. A comforting thought, anyway, was that:

“It only takes one piece of inconsistent evidence to prove something is not authentic”

He also highlighted how unique each person’s handwriting actually is, since writers have a hard time changing ingrained habits: letter design, spacing and proportions rarely change. For forged samples, there are properties that experts can look for.

Marçal Rusiñol from CVC, Universitat Autònoma de Barcelona, Spain, went into the details of large-scale document indexing, going a bit deeper into how the indexing actually works. He had good points on how to make things work for the end user, i.e. how to improve human-computer interaction, and on how to rank documents by semantic similarity to a query, which goes a bit beyond just matching the search word given by the user.
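As a rough illustration of ranking by similarity rather than by exact word match, the sketch below orders documents by the cosine similarity between a query vector and document vectors. This is a generic technique, not necessarily the method presented in the talk; the vectors are assumed to come from some embedding model that is outside the scope of the example, and the names `cosine_similarity` and `rank_documents` are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_documents(
    query_vec: Sequence[float], doc_vecs: Dict[str, Sequence[float]]
) -> List[Tuple[str, float]]:
    """Return (document id, score) pairs, most similar document first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

Because the comparison happens in vector space, a document can rank highly even when it does not contain the literal search word, provided its vector points in a similar direction to the query's.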

Dimosthenis Karatzas from CVC, Universitat Autònoma de Barcelona, Spain, then talked about the urban world and scene text understanding: how to detect text in a noisy and vibrant environment. This matters because, as he said, over 50% of urban imagery contains text in some form. It turned out that the trick lies, in a way, in the context: text has typical features, such as alignment and character shapes, which make it distinct even if the observer does not know the language in question. Many machine-learning algorithms are suitable for word and object spotting, but the aim was to reach a higher level, true information spotting, where a machine could answer questions like “what is the price of 1 litre of milk” for any shop. They had already generated a simulated 3D traffic environment in which autonomous cars can be immersed in various weather and traffic conditions for testing, and the idea now was to create a simulated “supermarket” on which the aforementioned information spotting could be trained.

Jean-Yves Ramel from LIFAT, University of Tours (France), gave a talk titled “Interactive approaches and techniques for Document Image Analysis”, which compared the problems and solutions of computer vision and document image analysis; these turned out to have quite a few similarities. He highlighted the service aspect of a tool or algorithm: it would be beneficial to let domain experts fine-tune the algorithms and define the parameters that are meaningful in a given context. Bringing in the user, who is in fact another researcher, would enable them to do more, in limited time, with a given dataset. In short, researchers should take part in defining the services that are meant for them, to ensure that the features and data are available as needed.

Jean-Christophe Burie from the University of La Rochelle then gave the last talk and a workshop where the idea was to experiment with comics and their segmentation into speech bubbles. This had been one of the demos on the tour of the university's L3i laboratory, so it was interesting to experiment with it, even for a short while. Python and OpenCV were used for the image segmentation, and Tesseract was then used to extract the text from the speech balloons in the comics.
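The workshop code itself is not reproduced here. As a dependency-free stand-in for the region-finding step, the sketch below locates connected bright regions in a small binary image and returns their bounding boxes. It assumes that speech balloons show up as bright blobs after thresholding, and it uses a plain flood fill instead of OpenCV's contour detection; the function name `find_bright_regions` and the `min_area` parameter are hypothetical.

```python
from collections import deque
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right), inclusive


def find_bright_regions(grid: List[List[int]], min_area: int = 1) -> List[Box]:
    """Return bounding boxes of 4-connected regions of 1s with at least `min_area` pixels."""
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill from this unvisited bright pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            top, left, bottom, right, area = r, c, r, c, 0
            while queue:
                y, x = queue.popleft()
                area += 1
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and grid[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Small specks are ignored; only regions large enough to be balloons are kept.
            if area >= min_area:
                boxes.append((top, left, bottom, right))
    return boxes
```

In a setup like the one described above, each returned box would be cropped from the page image and handed to an OCR engine such as Tesseract to read the balloon text; that step is left out here because it needs external libraries and real images.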

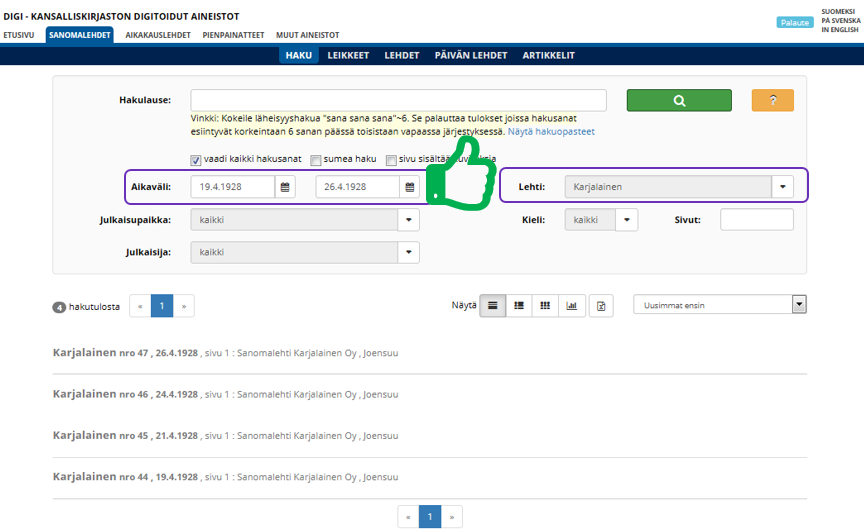

Pick a date and a newspaper if you want to target a specific one. Free-text search and all of its options are useful if you have a specific search idea but want to see what is found across newspapers (or journals).
